Querying S3 Data With Redshift Spectrum

Image of colorful lights over a subway sign by Quijano Flores

Image of colorful lights over a subway sign by Quijano Flores

VSCO uses Amazon Redshift Spectrum with AWS Glue Catalog to query data in S3. This piece describes steps taken to adopt Redshift Spectrum for our primary use case — behavioral events data, lists subsequent use cases, and closes with tips we’ve learned along the way.

Behavioral Events Data

The first use case focuses on how we updated the transformation and loading of behavioral events for analytics.

The Problem

In late 2018, VSCO’s behavioral event data was growing quickly. Increased user engagement across VSCO’s mobile and web platforms was great news, but we wanted to limit storage costs for the rising influx of event data. Let’s first overview the data’s upstream origins before describing the transform and load steps of that behavioral events data pipeline.

Behavioral Event Origins

When people interact with VSCO on our web or mobile platforms, their actions trigger the platforms to record event messages. For example, editing a photo will result in an event message describing which preset was used. The event message is sent to an API that publishes it to a Kafka topic. A consumer reads batches of event messages from the topic and writes them as Apache Parquet files to AWS S3. We call these unprocessed Parquet files “alpha” data.

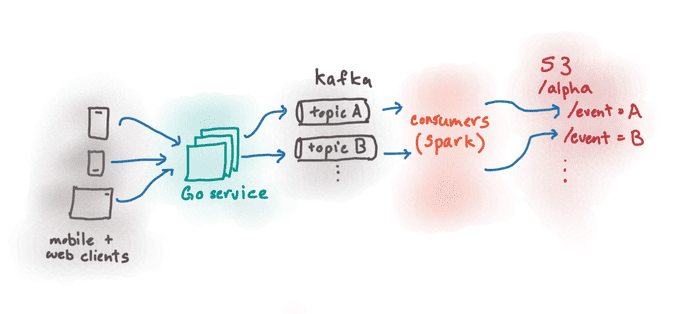

Diagram: Behavioral event origins and upstream pipeline

Phones and the VSCO website send behavioral event messages to a Go service. The Go service sends the messages to Kafka topics matching the event types. Spark consumers read from the Kafka topics and write the data to S3 at example prefixes

Phones and the VSCO website send behavioral event messages to a Go service. The Go service sends the messages to Kafka topics matching the event types. Spark consumers read from the Kafka topics and write the data to S3 at example prefixes alpha/event=A and alpha/event=B

This part of the behavioral events data pipeline continued to serve our needs and was not updated to protect storage costs. The raw “alpha” data remained the source data for both versions of the downstream pipeline.

Transform & Load into AWS Redshift

Our old pipeline read raw “alpha” data from one AWS S3 location and wrote processed “beta” JSON files to another. The processing step deduplicates events, removes unsupported characters, updates a few field types, and flattens nested fields. From the “beta” S3 location, the JSON data was copied into Amazon Redshift tables. Amazon Redshift served as our analytics database, where Data Scientists and Analysts used the event tables for ad hoc exploration and building downstream tables.

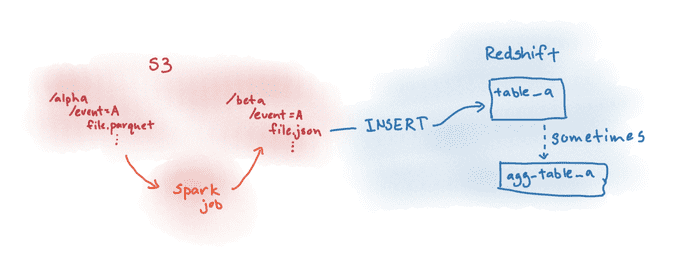

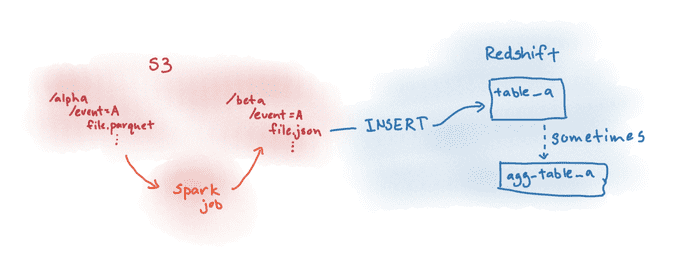

Diagram: Transform & load to Redshift pipeline

This diagram depicts transform and load steps for an example behavioral event type “A”. A Spark job reads Parquet files from an S3 prefix

This diagram depicts transform and load steps for an example behavioral event type “A”. A Spark job reads Parquet files from an S3 prefix alpha/event=A. It processes the data and writes it as JSON files to S3 prefix beta/event=A. Then, the JSON files are inserted into a Redshift table. Sometimes, aggregate data from ‘table_a’ is stored in a Redshift table ‘agg_table_a’.

Amazon Redshift Storage

Our Amazon Redshift cluster comprises DC2 nodes, which store data locally for fast performance. Storing all the behavioral events data in the Redshift cluster enabled speedy query responses, at the cost of adding new nodes whenever disk space ran low. We were concerned to see disk space use growing more quickly than our compute needs, especially after we found most behavioral events tables were infrequently queried. Some were used to update downstream tables that tracked company metrics or experiment results in daily or hourly intervals. Many had query logs showing a few ad hoc investigations spread over many weeks. We saw that we should preserve query access to the data, but were paying too high a cost for its storage.

What if we could query the “beta” data directly instead of storing it all in our AWS Redshift cluster? Storing more data in S3 would cost significantly less than adding more Redshift nodes (see Redshift pricing and S3 pricing documentation). We could then reserve Redshift cluster storage for only the most frequently queried data.

Solution

Introducing Amazon Redshift Spectrum

Amazon Redshift Spectrum is a feature of Amazon Redshift that enables us to query data in S3. Our most common use case is querying Parquet files, but Redshift Spectrum is compatible with many data formats. The S3 file structures are described as metadata tables in an AWS Glue Catalog database. Within Redshift, an external schema is created that references the AWS Glue Catalog database. The external schema provides access to the metadata tables, which are called external tables when used in Redshift. Those external tables can be queried like any other table in Redshift. External tables can even be joined with Redshift tables. The familiar table interface meant introducing Redshift Spectrum would require minimal onboarding for data consumers.

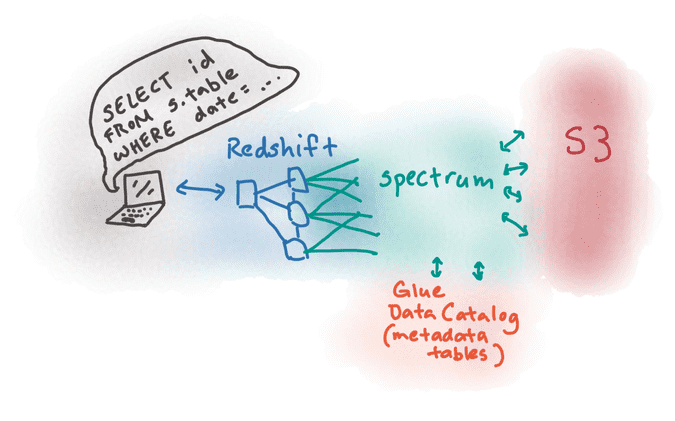

Diagram: Redshift Spectrum overview

A user queries Redshift with SQL

A user queries Redshift with SQL SELECT id FROM s.table WHERE date=.... Redshift is connected to the Redshift Spectrum layer. The Redshift Spectrum layer can access metadata in a Glue Data Catalog as well as files in S3.

The costs for Redshift Spectrum are determined by the amount of data scanned during queries. If S3 data is in a columnar format (like Apache Parquet files), only the specified columns in a query are scanned. This cost structure is a good fit for infrequently queried historical event data. It encourages us to keep most data in S3 and only promote recent or often-queried data to Redshift cluster storage.

Adopting Redshift Spectrum for behavioral events data

A few incremental improvements paved the way to our current behavioral events storage solution.

1. Updating the “beta” file structure

Recall Redshift Spectrum scans only the columns needed if the data is in a columnar format. To decrease the data scanned during queries, we converted the “beta” files from JSON to Apache Parquet. We also used snappy compression to reduce the Parquet file sizes. Date partitions were included in the “beta” data S3 prefixes and external tables to limit data scanned.

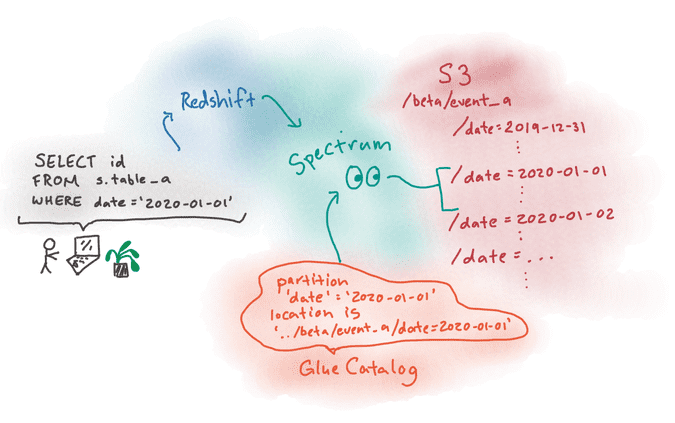

Diagram: Using date partitions for Redshift Spectrum

This image depicts an example query that includes a “date” partition. A user queries Redshift with SQL: “SELECT id FROM s.table_a WHERE date=’2020-01-01’“. The Redshift Spectrum layer receives the query, and looks up the date partition with value ‘2020-01-01’ in the Glue Catalog. It gets the S3 location ending in the prefix ‘beta/event_a/date=2020-01-01’. Then, the Redshift Spectrum layer only scans data in the given S3 location.

This image depicts an example query that includes a “date” partition. A user queries Redshift with SQL: “SELECT id FROM s.table_a WHERE date=’2020-01-01’“. The Redshift Spectrum layer receives the query, and looks up the date partition with value ‘2020-01-01’ in the Glue Catalog. It gets the S3 location ending in the prefix ‘beta/event_a/date=2020-01-01’. Then, the Redshift Spectrum layer only scans data in the given S3 location.

2. Permitting querying across AWS accounts

Our S3 buckets that house the alpha and beta Parquet files reside in a separate AWS account (Account A) from our AWS Redshift cluster (Account B). This presented a permissions challenge.

We decided that table metadata should reside in the same account as the data it describes, so Glue databases live in Account A with the S3 data. This gave us the option to move away from Redshift or deprecate Account B in the future while continuing to query our S3 data with Amazon Athena using the same Glue databases.

Redshift users query with Redshift Spectrum in Account B. They needed access to the Glue database to read the table’s metadata for the S3 files, and permission to read the files from S3.

Permissions setup:

- We created an

account-b-redshiftservice-linked IAM role in Account B. - An IAM role

account-a-s3-gluewas created in Account A. We granted the role access to Account A’s Glue database and S3 locations. - We created a trust relationship allowing the

account-b-redshiftrole to assume theaccount-a-s3-gluerole.

After permissions were in place, we tied them together by creating an external schema in Redshift. The external schema chains the roles in the iam_role parameter. This allows account-b-redshift to assume the account-a-s3-glue role whenever a Redshift user queries the external schema.

create external schema example_schema from data catalog

database 'exampledb' region 'us-west-2'

iam_role 'arn:aws:iam::123456789012:role/account-b-redshift,arn:aws:iam::210987654321:role/account-a-s3-glue';We added a Terraform module and documentation to quickly create new Glue databases, IAM roles, and IAM policies for future cross-account Redshift Spectrum use cases.

Results

Diagram: Transform & load to Redshift pipeline (before Redshift Spectrum)

Description: Transform & load to Redshift pipeline (before Redshift Spectrum)

This diagram is a copy of the “Transform & load to Redshift pipeline” from the “Problem” section. For an example behavioral event type, a Spark job reads Parquet files from an

Description: Transform & load to Redshift pipeline (before Redshift Spectrum)

This diagram is a copy of the “Transform & load to Redshift pipeline” from the “Problem” section. For an example behavioral event type, a Spark job reads Parquet files from an alpha S3 prefix and writes JSON files to a beta S3 prefix. The JSON files are then inserted into a Redshift table.

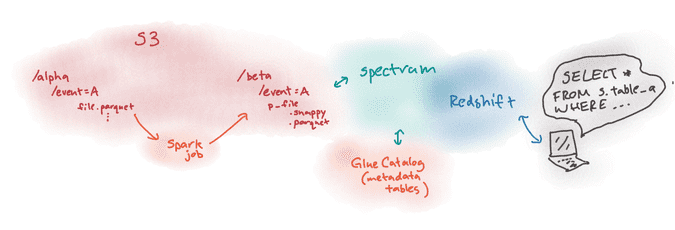

Diagram: Querying behavioral events data with Redshift Spectrum

Image description: A Spark job reads Parquet files from S3 prefix

Image description: A Spark job reads Parquet files from S3 prefix alpha/event=A and writes processed snappy compressed Parquet files to S3 prefix beta/event=A. Files in the beta/event=A prefix can be queried using Redshift Spectrum. The step inserting data into Redshift is absent.

Redshift Spectrum liberated us from depending on the Redshift cluster for data storage. We removed the “load to Redshift” step from our behavioral events pipeline and limited purchasing more nodes for the Redshift cluster. Storing Parquet files in S3 described in a Glue Catalog also gives us the options to use Amazon Athena for querying and add on more AWS Glue features in the future.

Subsequent Redshift Spectrum Use Cases at VSCO

Using Redshift Spectrum to query data in S3 helps us make data more accessible, more quickly than before. We no longer have to consider the storage costs of moving data sets into the Redshift cluster to make them queryable. The Data Engineering team now enables query access to S3 files in any supported format. This empowers Data Scientists and other data consumers to explore large historical data sets and get earlier insight into new data.

Queryable logs

Problem: We needed access to database changelogs for investigations and table replication.

VSCO developed an in-house service that reads the changelogs from our MongoDB and MySQL tables, writes the logs to Kafka, then stores them in S3 as Parquet files. With Redshift Spectrum, we provided query access to those logs. This has enabled ad hoc exploration of historical data and investigations of anomalies. We also use scheduled queries to update Redshift replications of the MongoDB and MySQL tables.

Third-party data

Problem: For each new third-party data source, we created a different process to make the data accessible.

We now implement a simple pattern for new third-party data sources:

- There is an existing “third-party” S3 bucket and “third-party” Glue database. Existing IAM roles and policies allow Redshift users to read data from the S3 bucket and update tables and partitions in the Glue database.

- Data from a new third-party source is stored in a “third-party” S3 bucket at a prefix like

source_name/raw - External tables and partitions for the new data are created for the

source_name/rawdata, giving query access to Data Scientists. - Processed versions of the third-party data are stored in the “third-party” S3 bucket at prefixes like

source_name/v=[version] - External tables and partitions for the new version are created for the

source_name/v=[version]data, allowing Data Scientists to easily review the changes.

Immediately after we gain access to a new data source and export it into S3, we can provide query access to the data with Redshift Spectrum. As we process the raw data into different versions (also written to S3 as Parquet files), all versions of the data can be easily queried.

Benefits:

- Provides quick query access to raw data dumps for Data Scientists. This enables faster iteration and collaboration between the Data Science and Data Engineering teams.

- If desired, raw and processed data can be queried from S3.

Tips

We compiled these tips based on learnings gained during our implementation:

- If you know the partition values and locations, it is fast and inexpensive to add them using SQL or the AWS Glue API. We either run a script to add many partitions at once, or schedule the additions with Apache Airflow tasks.

- Glue Crawlers can be defined to periodically crawl through new data and create relevant partitions for you. A crawler processes (and downloads if the data is compressed) all new files to infer their structure. For large data, running the crawlers becomes costly.

- “MSCK REPAIR TABLE” in Athena scans a file system (like S3) and adds missing partitions. Running this command repeatedly would keep partitions up to date. However, the AWS docs note “it is possible it will take some time” and might time out. Many users complain it is slow.

- Values in the raw data may not be queryable by Redshift Spectrum. We ran into “Multibyte character load errors” before preprocessing the files.

- Use SQL extensions to query nested data with Redshift Spectrum.

- Use partitions when querying external tables. This limits the data scanned by Redshift Spectrum, thereby keeping costs down.

- This lab walks through adding partitions and demonstrates their impact on queries.

Resources

This AWS Big Data Blog post describes Redshift Spectrum and its benefits in more detail.

Acknowledgments

Editors:

- Kevin Johnson

- Shruti Sharma

- Zach Hodges

This post summarizes work done across many projects. Contributors and advisors for the projects in this post:

- Atsunori Kakitani

- Benji Hertel

- Connie Chen

- Desiree Cox

- Jack Schonbrun

- Jennifer Georgevich

- Kevin Johnson

- Lucas Kacher

- Melinda Lu

- Ryan Nguyen

- Vlad Losev

If you find this kind of work interesting, come join us!