Scaling Elasticsearch for Discovery at VSCO

VSCO Photo by benceszemerey

Search at VSCO has transformed from a way to locate users and order history to a full-fledged image discovery system. In early 2013, we needed a way to find users who had registered to purchase VSCO Film on our website. The quickest way to solve this then was to perform queries across our MySQL user and order tables—and while not a scalable approach, it initially got the job done.

As we continued to grow and develop new products and services, it was no longer feasible to perform queries across growing relational databases with any sort of scale. In late 2013 we began exploring the use of Elasticsearch to:

- Improve our internal admin user search

- Allow for public search of profiles and images

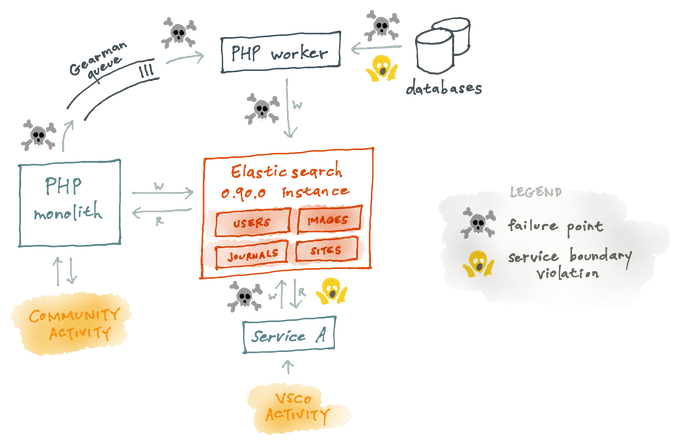

To tackle this we launched a single Elasticsearch 0.90 instance and ingested data with post-write calls from our monolithic PHP application. We walked our databases and hit the ground running. This allowed us to launch our first public Elasticsearch product, Map, Image and Grid Search, in February 2014.

First iteration of VSCO search service (skulls by @skewleft)

This was an avenue to surface the most influential content on our platform in a way that would inspire our users to both continue to discover new content and continue to create on VSCO. All of the content we featured in search was hand-selected by our in-house curation team, and at the end of our initial cluster’s life, the instance was only serving somewhere in the neighborhood of 500K images.

Initial Problems

By late 2015 we looked back on our initial foray into Elasticsearch, and while it had treated us well (all on a single instance), we came up with a list of pain points and things we wanted to improve:

- Keeping distributed data in-sync and de-normalized can be difficult without a solid ingestion pipeline.

- Not providing strict index schema or having a migration plan was problematic with our rapidly increasing feature-set.

- Not defining service-boundaries or enforcing contracts leads to tight coupling.

Looking at our initial problems, and considering our growing product needs, we knew we needed to build a cluster that could handle a lot more data and provide strict ingestion and access patterns. In order to take Search to the next level, we needed to ensure that our search service could meet the following criteria:

- Continue to support internal Admin search.

- Ensure all user generated content is ingested into the search cluster.

- Allow us to run on easily scalable, cost-efficient infrastructure.

- Allow us to monitor search from all aspects, including: ingestion, indexing, searching and general cluster health.

- Abstract reads/writes to an independent application layer to enforce service-boundaries.

- Tolerate interruptions to indexing without losing updates.

- Allow for maintenance windows that neither keep new items out of search nor leave search results only partially available.

- Enable simple incremental upgrades.

Initially we looked into converting our standalone instance into a larger cluster with sharding and replication, but it turned out to be an impossible task due to the following:

- We had no way to take the single instance offline for the conversion without incurring downtime.

- Elasticsearch 0.90 was severely out of date at the time.

In 2015 there were only a few options for a self-hosted, scalable search experience. Given our previous, mostly positive experience with Elasticsearch and all of the new features added between 0.90 and 2.x, we decided that it was a good fit for our needs moving forward.

The Next Phase

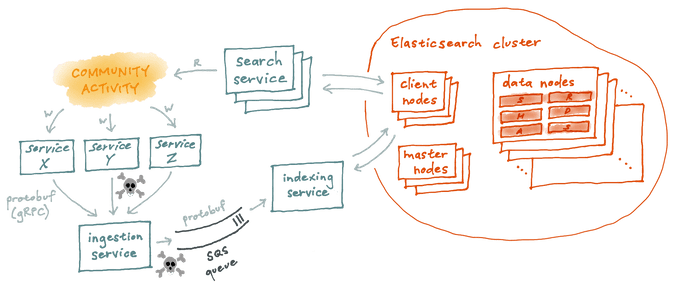

We decided the best way forward was to design and build a brand new Elasticsearch cluster alongside our legacy 0.90 instance. This allowed us to remain entirely online during the migration and provided us a way to start fresh without any of the baggage of the past. Our current solution architecture is a combination of Amazon ElastiCache, SQS, Docker, Elasticsearch, Go, gRPC and Protocol Buffers.

Everything that follows is in service of our goal of getting all user content indexed. Here are the details of how we did it.

Scalability

We built a custom Elasticsearch Docker image that we run on AWS hardware using EBS-backed storage. We allocate and manage infrastructure using Terraform and provision the base instance dependencies using Chef. We have independent scaling around all of our Elasticsearch nodes and Go services:

- 3 Master Nodes

- 3 Client Nodes

- 30 Data Nodes (and growing)

- Search Service

- Ingestion Service

- Indexing Service

Service Boundary Abstraction (Go Services)

In our initial Elasticsearch deployment, it wasn’t feasible to define service boundaries. During the initial phases of deconstructing our PHP monolith, services that needed access to search could not shoulder the overhead of performing requests to our PHP monolith. To get around this latency issue they would access the cluster directly. To regain control over our search cluster, we needed our service layer to be scalable and performant. We wrote three independent Go services that would control the abstraction necessary for others to communicate with our Elasticsearch data.

Ingestion Service (Go)

Leveraging the work we have done for gRPC @VSCO, we were able to easily build, deploy and implement a robust ingestion pipeline that relied on gRPC and protocol buffers to ensure coherent communication between our monolith and the new search services we built to replace parts of it. Rather than define a new schema for our search data, as we had done in the past, we defined protocol buffers to model our user data at the service level and we ingest the same protocol buffers that the services use to define their models.

We ingest our data through updates sent over gRPC to a Go Ingestion Service from our monolithic PHP application and Core API replacement microservices. The service places the item(s) as protocol buffers on an SQS Queue for processing individually or in bulk.

We use retries in the case of ingestion service interruptions and we backfill to address missing data due to upstream provider issues.

Indexing Service (Go)

One of the most important problems we set out to solve was dropped inserts and updates from our applications to Elasticsearch. By fronting our indexing service with an Amazon SQS queue, we now have the ability to disable indexing at any time and not lose incoming documents / updates.

Based upon the incoming item data-type, we query Elasticsearch for a matching document. If found, updates are merged in (if they pass validation) and then persisted back to Elasticsearch. If the change requires a fanout of de-normalized data, items are added back to the SQS queue to be processed in the background. We throttle/stop indexing through our DCDR feature-flag framework.
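The lookup, merge, persist and fanout flow can be sketched roughly as follows. This is a simplified illustration, not our service code: the `Doc`, `Update` and `Indexer` types are hypothetical, an in-memory map stands in for Elasticsearch, a slice stands in for the SQS queue, and the validation rule is an assumption. The sketch also assumes that a missing document is simply created, which the description above does not specify.

```go
package main

import (
	"errors"
	"fmt"
)

// Doc is a simplified search document; real documents would be richer.
type Doc map[string]string

// Update is a hypothetical partial update for one document.
type Update struct {
	ID     string
	Fields Doc
	Fanout bool // true when de-normalized copies elsewhere must also change
}

// Indexer holds an in-memory stand-in for Elasticsearch and a slice
// standing in for the queue that receives fanout work.
type Indexer struct {
	store map[string]Doc
	queue []string
}

// validate is an assumed rule: an update needs an ID and at least one field.
func validate(u Update) error {
	if u.ID == "" || len(u.Fields) == 0 {
		return errors.New("invalid update")
	}
	return nil
}

// Apply looks up the existing document, merges the update into a copy of it,
// persists the result, and queues fanout work when the update requires it.
func (ix *Indexer) Apply(u Update) error {
	if err := validate(u); err != nil {
		return err
	}
	merged := Doc{}
	for k, v := range ix.store[u.ID] { // a missing document yields no iterations
		merged[k] = v
	}
	for k, v := range u.Fields {
		merged[k] = v
	}
	ix.store[u.ID] = merged
	if u.Fanout {
		ix.queue = append(ix.queue, u.ID)
	}
	return nil
}

func main() {
	ix := &Indexer{store: map[string]Doc{"u1": {"name": "old", "city": "Oakland"}}}
	_ = ix.Apply(Update{ID: "u1", Fields: Doc{"name": "new"}, Fanout: true})
	fmt.Println(ix.store["u1"]["name"], ix.store["u1"]["city"], ix.queue) // new Oakland [u1]
}
```

Pushing fanout work back onto the queue keeps each update small and lets the de-normalized copies catch up in the background.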

Search Service (Go)

We built a Search Service that provides our only access point for performing reads on the cluster. This allows us to disable endpoints, throttle endpoints, provide public or private access to specific endpoints, and stat and monitor everything.

Our mobile and web clients communicate directly with our Search Service, bypassing legacy routing through our monolith. Our Admin communicates with the Search Service over gRPC, and provides access to the now larger store of user data, helping our Support team find users on the platform.

Migration Framework (Go)

In a world where settings and schemas are created and applied via HTTP requests, we decided it was important to define a structured way to manage cluster state.

Based upon concepts from Ruby’s Rake and Doctrine’s Migration Framework, we built a system to handle schema changes and migrations programmatically. All of the changes we have historically made to the cluster are defined in migration files and their status is maintained in a state-store.
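The core idea can be sketched in a few lines of Go. This is a simplified illustration under assumed names, not our framework: `Migration`, `StateStore` and `Run` are hypothetical, the `Up` functions are no-ops where a real migration would issue HTTP requests to the cluster, and a map stands in for a durable state-store.

```go
package main

import (
	"fmt"
	"sort"
)

// Migration is one versioned cluster change, such as a mapping or settings update.
type Migration struct {
	Version int
	Name    string
	Up      func() error // would issue the HTTP request(s) against the cluster
}

// StateStore records which versions have been applied. A map stands in for
// whatever durable store a real system would use.
type StateStore map[int]bool

// Run applies pending migrations in version order and stops at the first
// failure, so a failed migration is never recorded as applied.
func Run(ms []Migration, state StateStore) ([]string, error) {
	sorted := append([]Migration(nil), ms...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Version < sorted[j].Version })

	var applied []string
	for _, m := range sorted {
		if state[m.Version] {
			continue // already applied on an earlier run
		}
		if err := m.Up(); err != nil {
			return applied, fmt.Errorf("migration %d (%s): %w", m.Version, m.Name, err)
		}
		state[m.Version] = true
		applied = append(applied, m.Name)
	}
	return applied, nil
}

func main() {
	noop := func() error { return nil }
	ms := []Migration{
		{Version: 2, Name: "add_hashtag_field", Up: noop},
		{Version: 1, Name: "create_images_index", Up: noop},
	}
	state := StateStore{1: true} // version 1 was applied on an earlier run
	applied, err := Run(ms, state)
	fmt.Println(applied, err) // [add_hashtag_field] <nil>
}
```

Keeping the applied versions in a state-store makes the run idempotent: running it again applies nothing new.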

Federation

Out of the box, Elasticsearch does not have authorization or authentication for HTTP requests. In order to work towards our service-boundary ideals, we put our new Elasticsearch cluster in a separate AWS Security Group, with only the Search and Indexing Services given explicit access.

Maintenance / Upgrades

We designed our cluster to allow rolling upgrades without any downtime by leveraging sharded indexes and replication across multiple AWS availability zones.

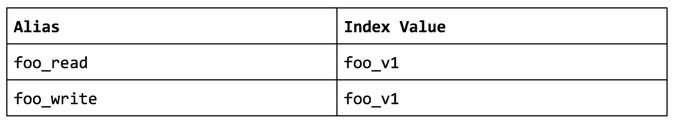

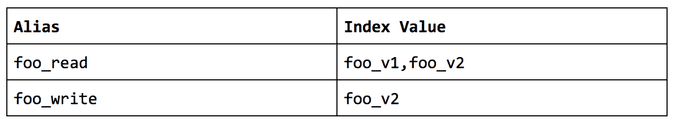

This is possible partly through the use of index aliases to read/write all data to and from the cluster.

For example: start with a foo_v1 index and create foo_read and foo_write aliases that point to the foo_v1 index. Your application should use foo_read as its read path and foo_write as the write.

When the foo_v1 index grows too large, or you need to make a material change to the mappings, add a foo_v2 index and then update the read / write aliases as follows:

This performs the following function:

- All new writes will fill the foo_v2 index

- All reads will look across both indices

By operating in this manner, we can change which underlying index(es) the aliases point to without the application noticing, which makes it possible to modify mappings or scale without requiring application changes or deploys.

Achievements / Future

Four years ago we could only dream of the Elasticsearch cluster we operate today. Now as we move towards even larger audiences, we can ensure that their content is all discoverable on the platform. In the end, what did we achieve? We are now running an Elasticsearch cluster that:

- Handles 25MM read and 5MM index requests per day.

- Maintains a more than 1,000X increase in stored documents, and counting.

- Can easily scale any part of the infrastructure on-demand.

In addition to our ability to scale and maintain a large cluster, the ownership of Search and Discovery has moved to the Machine Learning team, where we have been able to build many cool features leveraging search, including:

- Using our Ava technology to augment image search

- Trending hashtags

- Related images

- Using search to easily prototype new recommendation services

I look forward to VSCO’s future with Elasticsearch and specifically the replacement of our Ingestion Service with a Kafka pipeline. This will allow us to:

- Remove blocking RPC updates

- Easily re-index from a given point in time

- Maintain a truly up-to-date caching layer

- Move to true immutable infrastructure for search, which will allow us to maintain full availability during any maintenance windows.

Thanks for reading! If you find this kind of work interesting, come join us!